Dealing with Outliers: When to Remove, Replace, or Adjust

Choosing the right approach to handle outliers in the datatset is crucial for maintaining data integrity and model performance.

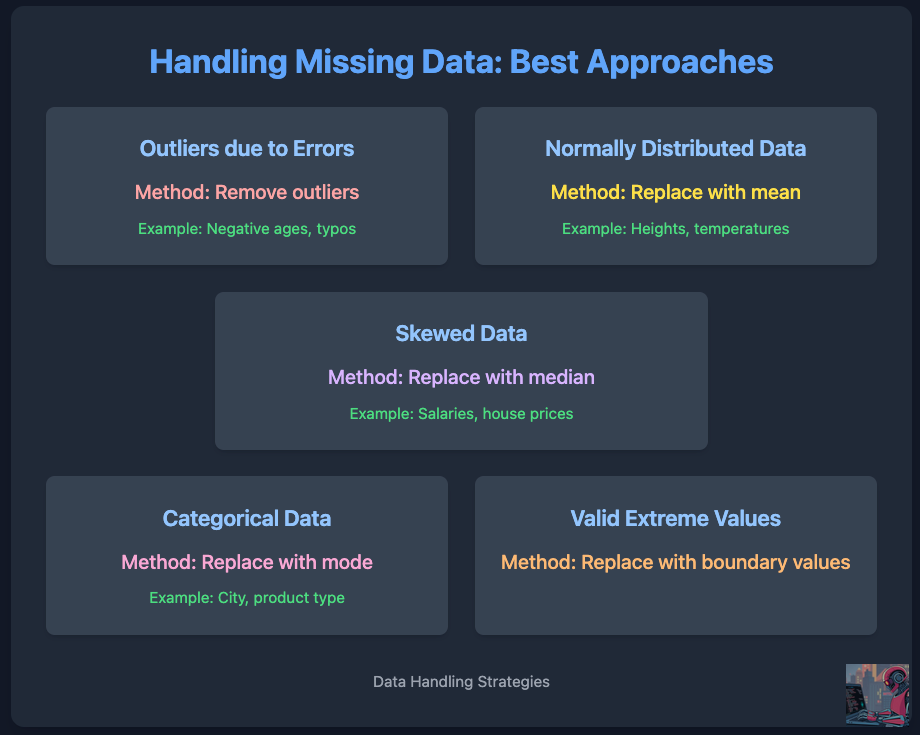

Outliers can significantly impact machine learning models. They may arise due to errors, natural variations, or extreme values in a dataset. Choosing the right approach to handle them is crucial for maintaining data integrity and model performance. Here's a structured guide to managing outliers based on different scenarios.

Removing Outliers

Removing outliers is often the best approach when they result from data entry errors or sensor malfunctions. Keeping erroneous values can distort statistical analyses and degrade model accuracy.

Identification Methods

Boxplots to detect values outside the interquartile range (IQR)

Z-scores to identify points beyond ±3 standard deviations

Domain knowledge to verify data validity

Example

A dataset tracking employee ages contains an entry of 350 years. Since this is an error, it should be removed.

Replacing with Mean

Replacing outliers with the mean preserves overall statistical properties for normally distributed data.

Example

In a dataset of student test scores following a normal distribution, if a few scores are extreme due to data corruption, replacing them with the mean can help maintain consistency.

Replacing with Median

For skewed datasets, the median is a better alternative to the mean because it is less sensitive to extreme values.

Example

In salary data, where a few executives earn significantly more than the majority, replacing missing or erroneous values with the median prevents distortion.

Replacing with Mode

For categorical features, using the mode—the most frequently occurring value—helps maintain consistency without introducing bias.

Example

If some city names are missing in a dataset, filling them with the most common city ensures uniformity without introducing unrealistic values.

Using Boundary Values

When extreme values are valid but highly skewed, capping them at a predefined threshold (Winsorization) can prevent undue influence on the model.

Example

In real estate price data, rather than keeping an outlier value of $50 million, capping it at the 99th percentile reduces the impact of extreme observations while preserving distribution characteristics.

Conclusion

Handling outliers correctly is essential for building robust machine-learning models. The key is understanding the nature of the outliers and applying the appropriate technique based on the data distribution and problem context.